HDR Rendering

Generally, when you look at an image on the screen, it is rendered in a low dynamic range (LDR), because it usually uses an 8-bit RGB color model, in which each color is represented as a sum of three components: red, green, and blue. Each component can take a value from 0 to 255, occupying one byte. Such a model, like any other that allocates a small range of values per component, has a ratio of maximal to minimal intensity equal to 256:1. This ratio is called the dynamic range. As shown below, this dynamic range is rather low.

On the other hand, the human eye has an absolute dynamic range of about 10^14:1. At any given time the eye's dynamic range is smaller, around 10^4:1, but the eye gradually adapts to a different part of the range when necessary. This is what happens when you, for example, step out of a dark room into a sunlit street.

The difference between what the LDR model described above can represent and what the human eye can see is evident. That is why there is now a shift toward high dynamic range (HDR) models, which provide more possible values per component and therefore extend the dynamic range.
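To make the gap concrete, the ratios can be compared on a logarithmic scale. The sketch below is only an illustration: it takes 256:1 for an 8-bit component and roughly 10^4:1 and 10^14:1 for the eye, and expresses each as a number of doublings (photographic stops). The function name `stops` is a hypothetical helper, not part of any engine API.

```python
import math


def stops(contrast_ratio: float) -> float:
    """Return how many doublings of intensity a contrast ratio spans."""
    return math.log2(contrast_ratio)


ldr_stops = stops(256)           # 8-bit component
eye_instant_stops = stops(1e4)   # eye at any given moment (approximate)
eye_total_stops = stops(1e14)    # eye after adaptation (approximate)

print(f"8-bit LDR:     {ldr_stops:.1f} stops")
print(f"eye, instant:  {eye_instant_stops:.1f} stops")
print(f"eye, adapted:  {eye_total_stops:.1f} stops")
```

Under these figures an 8-bit component covers 8 stops, while the eye covers a little over 13 at any moment and more than 46 once adaptation is taken into account.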

HDR models in real-time rendering of 3D graphics were introduced not long ago, when the hardware finally became capable of applying such models to images. The goal of HDR rendering is to keep details in areas of large contrast differences and to accurately preserve light. To achieve that, intensity and light are described using actual physical values or values linearly proportional to them, and integers are replaced with floating-point numbers. This allows, for instance, storing appropriately high values for bright light sources while keeping the reflected light values relatively high, too. The usual procedure of HDR rendering is as follows:

- The scene is rendered into a floating-point buffer.

- This image is post-processed in the high dynamic range. This may include contrast and color balance adjustment and the application of effects.

- The processed image is transformed using tone mapping to be displayed on the screen.
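The three steps above can be sketched on a handful of luminance values. This is a toy model, not engine code: `render_scene` is a hypothetical stand-in for a renderer, the post-processing step is reduced to a single exposure gain, and the tone mapping step uses the simple `x / (1 + x)` curve as one possible operator.

```python
def render_scene():
    """Stand-in for a renderer: linear, unbounded luminance values."""
    return [0.02, 0.5, 1.0, 4.0, 250.0]


def post_process(hdr, exposure):
    """Adjust the image while it is still in the high dynamic range."""
    return [value * exposure for value in hdr]


def tone_map(hdr):
    """Compress [0, inf) into [0, 1) with the x / (1 + x) curve."""
    return [value / (1.0 + value) for value in hdr]


def quantize(ldr):
    """Convert [0, 1] values to 8-bit integers for display."""
    return [round(value * 255) for value in ldr]


frame = quantize(tone_map(post_process(render_scene(), exposure=0.5)))
print(frame)
```

Note that the brightest value stays distinguishable from the others all the way through post-processing and is compressed into the displayable range only in the last step.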

Unigine provides two algorithms to calculate average luminance (controlled by the render_hdr console variable):

- The logarithmic algorithm is more widespread and accurate. However, it cannot simulate glare unless the object is very bright and covers a large area, which makes it unsuitable for the sun.

- The quadratic algorithm is only approximate, but it makes it easy to render a realistically blazing sun.
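The exact formulas behind these two modes are not given here, so the following is only an illustration of how two kinds of averaging can react differently to a small, very bright source. It assumes that "logarithmic" means the common log-average (geometric mean) of luminance and that "quadratic" means a root mean square; the second interpretation in particular is a guess, and both function names are hypothetical.

```python
import math


def log_average(luminances, delta=1e-4):
    """Geometric mean of luminance; delta avoids log(0) on black pixels."""
    total = sum(math.log(delta + lum) for lum in luminances)
    return math.exp(total / len(luminances))


def rms_average(luminances):
    """Root mean square of luminance (assumed meaning of 'quadratic')."""
    return math.sqrt(sum(lum * lum for lum in luminances) / len(luminances))


dim_scene = [0.1] * 1000
scene_with_sun = [0.1] * 999 + [1000.0]  # one tiny, extremely bright pixel

for name, scene in (("dim", dim_scene), ("with sun", scene_with_sun)):
    print(f"{name:9s} log={log_average(scene):.4f} rms={rms_average(scene):.4f}")
```

In this toy scene the log-average barely changes when the single bright pixel is added, whereas the root mean square rises by more than two orders of magnitude. Whether the engine's modes behave the same way depends on their actual implementation.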

Effects

HDR rendering makes post-processing of a higher quality possible, compared to conventional means. Below are examples of the effects that are usually applied during HDR rendering.

Bloom or Glow

This effect reproduces an artifact of real-world cameras: margins of light around very bright objects. It is one of the most popular effects now.

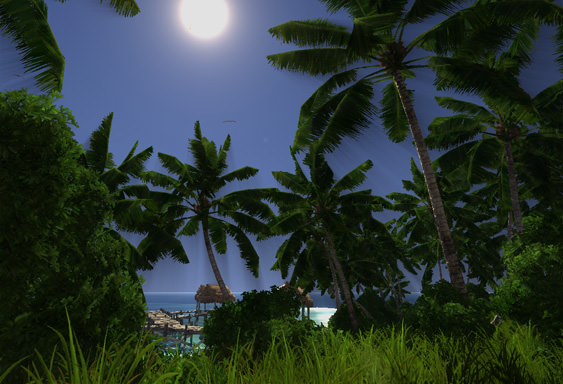

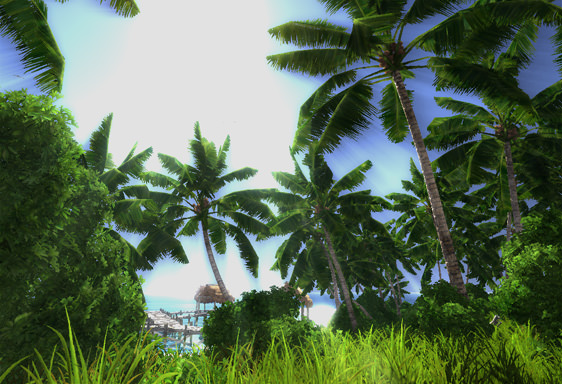
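A common way to build such a glow is to extract the pixels brighter than a threshold, blur them, and add the result back to the image. The sketch below shows that idea on a single row of luminance values; it is a simplified illustration with hypothetical function names and parameters, not the engine's implementation.

```python
def bright_pass(pixels, threshold):
    """Keep only the part of each pixel that exceeds the threshold."""
    return [max(p - threshold, 0.0) for p in pixels]


def box_blur(pixels, radius):
    """Average each pixel with its neighbors; outside pixels count as black."""
    size = 2 * radius + 1
    blurred = []
    for i in range(len(pixels)):
        lo = max(0, i - radius)
        hi = min(len(pixels), i + radius + 1)
        blurred.append(sum(pixels[lo:hi]) / size)
    return blurred


def bloom(pixels, threshold=1.0, radius=2, strength=1.0):
    """Add a blurred copy of the bright areas on top of the image."""
    glow = box_blur(bright_pass(pixels, threshold), radius)
    return [p + strength * g for p, g in zip(pixels, glow)]


row = [0.2, 0.2, 0.2, 9.0, 0.2, 0.2, 0.2]
result = bloom(row)
print(result)
```

The bright pixel in the middle spills light onto its neighbors within the blur radius, while pixels farther away are left unchanged. Because the buffer is floating point, values above 1.0 survive long enough to drive the effect.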

Adaptive Exposure

This effect measures exposure at different moments in time and imitates eye adaptation between these moments. The effect benefits from HDR rendering a lot.
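One simple way to imitate this adaptation is to let the "adapted" luminance drift toward the currently measured average with an exponential lag. The sketch below assumes that model; the time constant `tau`, the `key` value, and the function names are illustrative choices, not engine parameters.

```python
import math


def adapt(adapted, measured, dt, tau=1.0):
    """Move the adapted luminance toward the measured one over time."""
    blend = 1.0 - math.exp(-dt / tau)
    return adapted + (measured - adapted) * blend


def exposure(adapted, key=0.18):
    """Scale factor that maps the adapted luminance to a mid-gray key."""
    return key / max(adapted, 1e-6)


# Step from a dark room (0.05) into a sunlit street (50.0) at 60 frames/s.
adapted = 0.05
history = []
for _ in range(300):  # five seconds of frames
    adapted = adapt(adapted, measured=50.0, dt=1.0 / 60.0)
    history.append(adapted)

print(f"after 1 s: {history[59]:.2f}, after 5 s: {history[-1]:.2f}")
print(f"exposure after 5 s: {exposure(history[-1]):.5f}")
```

Right after the change the image is overexposed, because the exposure is still tuned for the dark room. It then settles gradually as the adapted value approaches the new average.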

Cross Flares

This effect reproduces star-like flares that appear when photographing bright lights or reflections with a camera that uses a special filter.

Lens Flares

This effect reproduces a real-life camera artifact, which appears when light is scattered in a lens system due to internal reflection and surface irregularities of the lenses. It occurs when an image includes a very bright light source.

Tone Mapping

Screens themselves have a low dynamic range, though not as low as the one the RGB model provides. The specified contrast ratio of most monitors varies between 300:1 and 1000:1; however, the contrast of real content under normal viewing conditions is significantly lower. To convert an image rendered in HDR to LDR, a special color mapping technique called tone mapping is used. Usually, tone mapping is non-linear in order to keep enough range for dark colors and to gradually limit bright colors. How exactly this transformation is carried out depends on the specific tone mapping operator.
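The benefit of a non-linear curve can be seen by comparing it with plain clamping. The sketch below uses a simple exponential operator, `1 - exp(-exposure * x)`, as one example of such a curve; it is not necessarily the operator used by the engine, and all names here are hypothetical.

```python
import math


def clamp_map(value):
    """Linear mapping: everything above 1.0 is clipped to white."""
    return min(max(value, 0.0), 1.0)


def exposure_map(value, exposure=1.0):
    """Non-linear mapping: bright values approach 1.0 gradually."""
    return 1.0 - math.exp(-exposure * value)


def to_8bit(value):
    """Quantize a [0, 1] value to an 8-bit display level."""
    return round(min(max(value, 0.0), 1.0) * 255)


for hdr_value in (0.05, 0.5, 1.0, 4.0, 16.0):
    clamped = to_8bit(clamp_map(hdr_value))
    mapped = to_8bit(exposure_map(hdr_value))
    print(f"{hdr_value:6.2f} -> clamp {clamped:3d}, exponential {mapped:3d}")
```

With clamping, the values 4.0 and 16.0 both end up as pure white and the difference between them is lost. The exponential curve still separates them, at the cost of compressing the brightest part of the range.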

Drawbacks

Despite its popularity, HDR rendering has some drawbacks that should not be forgotten:

- This kind of rendering consumes more graphics memory than LDR rendering.

- HDR rendering is not supported by older graphics cards.

- Performance drops because HDR textures put a heavier load on the memory bus and there is more data to process.

- Combined use of HDR rendering and anti-aliasing is possible only on latest-generation graphics cards, as anti-aliasing also requires a larger screen buffer (though in terms of pixel resolution rather than bit depth).
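A rough estimate shows how the memory cost grows. The sketch below assumes a 1920x1080 four-channel color buffer, 1 byte per channel for LDR and 2 bytes per channel for a half-precision floating-point HDR format, and models anti-aliasing simply as several stored samples per pixel. The resolution, formats, and function name are illustrative assumptions only.

```python
def buffer_bytes(width, height, channels=4, bytes_per_channel=1, samples=1):
    """Size of one color buffer in bytes."""
    return width * height * channels * bytes_per_channel * samples


ldr = buffer_bytes(1920, 1080, bytes_per_channel=1)
hdr16 = buffer_bytes(1920, 1080, bytes_per_channel=2)
hdr16_aa4 = buffer_bytes(1920, 1080, bytes_per_channel=2, samples=4)

for name, size in (("LDR 8-bit", ldr), ("HDR 16-bit", hdr16),
                   ("HDR 16-bit, 4 samples", hdr16_aa4)):
    print(f"{name:22s} {size / (1024 * 1024):6.1f} MiB")
```

Under these assumptions the HDR buffer is twice the size of the LDR one, and combining it with four samples per pixel multiplies the footprint by eight. Real engines also keep several intermediate buffers, so the actual totals are higher.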